Use case:

Here, we will see how to download the Import Payables Invoices report. That is, we will download the .xml file equivalent to the report, which contains the same details as the report itself.

Why do we need this:

Note: By default, when we import AP standard invoices with the callback enabled and download the ESS job execution details using the Import Payables Invoices process request ID, we get only a .log file and not the actual execution report.

High-level steps:

- Create an app-driven integration and configure it to subscribe to the callback event

- If summarystatus indicates success and the Import Payables Invoices job succeeded, store the Import Payables Invoices request ID in a variable

- Call the Cloud ERP adapter with the submitESSJobRequest operation of ErpIntegrationService to run the report, and get the new request ID in the response

- In a while loop, call getESSJobStatus to check the status of the submitted report job

- Once the submitted ESS job succeeds, call downloadESSJobExecutionDetails using the Cloud ERP adapter

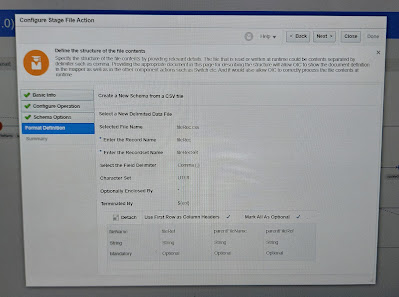

- Write the zipped file to a stage location using an opaque schema

- Unzip the file using a stage file action

- Map the XML file (selected with the XPath predicate fileType='xml') to the notification attachment
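
The polling and file-selection steps above are configured in the integration designer, not written as code, but their logic can be sketched outside the tool. The Python below is only an illustration under stated assumptions: `wait_for_job` and `extract_xml_files` are hypothetical helper names, the `get_status` callable stands in for the getESSJobStatus call, and the set of terminal states is an assumption that should be checked against your environment.

```python
import io
import time
import zipfile
from typing import Callable, Dict

# Assumed terminal ESS job states; verify the exact values in your environment.
TERMINAL_STATES = {"SUCCEEDED", "ERROR", "WARNING", "CANCELLED"}


def wait_for_job(get_status: Callable[[], str],
                 interval_seconds: float = 30.0,
                 max_attempts: int = 20) -> str:
    """Poll get_status until it returns a terminal state or attempts run out.

    get_status is a hypothetical stand-in for the getESSJobStatus call.
    """
    for _ in range(max_attempts):
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        time.sleep(interval_seconds)
    raise TimeoutError("ESS job did not finish within the polling limit")


def extract_xml_files(zip_bytes: bytes) -> Dict[str, bytes]:
    """Return {file name: content} for every .xml entry in the archive.

    This mirrors the fileType='xml' predicate: the .log entry is skipped.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return {
            name: archive.read(name)
            for name in archive.namelist()
            if name.lower().endswith(".xml")
        }
```

Capping the number of attempts keeps the loop from running forever if the report job never reaches a terminal state; a while loop in the integration usually needs a similar counter or timeout condition.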