Use case: This post builds a reusable integration that fetches CSV files (standalone CSV files and the contents of any zip files) from AWS S3, selected by file directory and file pattern, using the AWS S3 REST API.

REST response JSON format:

{

"fileReferences":[{

"base64FileReference":"base64data",

"fileName":"test.csv",

"parentFileName":"",

"base64ParentFileReference":""

}],

"ExceptionCode":"",

"ExceptionReason":"",

"ExceptionDetails":"",

"OutputCreatedFlag": ""

}
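To make the response format concrete, here is a minimal Python sketch of how a caller might decode it. The function name `decode_file_references`, the sample payload, and the rule that a non-empty `ExceptionCode` signals a fault are assumptions for illustration and are not part of the integration itself.

```python
import base64
import json


def decode_file_references(response_text):
    """Return {fileName: raw bytes} for each entry in fileReferences."""
    payload = json.loads(response_text)
    # Assumption: a non-empty ExceptionCode means the integration faulted.
    if payload.get("ExceptionCode"):
        raise RuntimeError(
            f"{payload['ExceptionCode']}: {payload.get('ExceptionReason', '')}"
        )
    return {
        ref["fileName"]: base64.b64decode(ref["base64FileReference"])
        for ref in payload.get("fileReferences", [])
    }


# Hypothetical sample shaped like the response format above.
sample_response = json.dumps({
    "fileReferences": [{
        "base64FileReference": base64.b64encode(b"id,name\n1,a\n").decode("ascii"),
        "fileName": "test.csv",
        "parentFileName": "",
        "base64ParentFileReference": "",
    }],
    "ExceptionCode": "",
    "ExceptionReason": "",
    "ExceptionDetails": "",
    "OutputCreatedFlag": "Y",
})

files = decode_file_references(sample_response)
print(sorted(files))
```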

Brief steps:

- Get the list of files from the AWS S3 REST API

- For each file, check whether it is a CSV or a zip file

- If it is a CSV, download the file reference, write it to a stage file, and later map it to the response as base64-encoded content.

- If it is a zip, download the zip file reference and then:

- If unzipFlag = N, write the reference to a stage file and later map it to the response as base64-encoded content.

- Otherwise, unzip it, write a reference for each extracted file to a stage file, and later map all file references to the response as base64-encoded content.

The flow also includes retry logic for getting the list of files from S3.
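The branching in the brief steps can be sketched in plain Python. This is only an illustration of the decision logic, not how the integration is built: `collect_outputs` is a hypothetical helper, the unzip happens in memory rather than through stage files, and setting the parent name to the zip file's name for extracted entries is an assumption.

```python
import io
import zipfile


def collect_outputs(name, data, unzip_flag):
    """Return a list of (fileName, parentFileName, bytes) for one S3 object."""
    lower = name.lower()
    if ".csv" in lower:
        # CSV: pass the file through unchanged.
        return [(name, "", data)]
    if ".zip" in lower:
        if unzip_flag == "N":
            # Zip, but the caller asked for the archive itself.
            return [(name, "", data)]
        # Zip with unzipping enabled: emit one entry per archived file.
        with zipfile.ZipFile(io.BytesIO(data)) as archive:
            return [
                (member.filename, name, archive.read(member))
                for member in archive.infolist()
                if not member.is_dir()
            ]
    # Neither CSV nor zip: nothing to return.
    return []


# Build a small zip in memory to exercise both zip branches.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("a.csv", "id\n1\n")
    archive.writestr("b.csv", "id\n2\n")
zip_bytes = buffer.getvalue()

print(collect_outputs("orders.zip", zip_bytes, "Y"))
```

The substring checks mirror the `contains(...)` tests used later in the flow; a stricter version would compare the file extension only.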

Implementation Logic steps:

- Create an AWS S3 REST connection with the trigger and invoke role

- Provide the REST API base URL, for example https://host.s3.us-west-2.amazonaws.com

- Set security to AWS Signature Version 4

- Provide the access key, secret key, AWS region, and service name

- Create an app-driven integration and configure the trigger as follows

- Pass the directory name as a template parameter

- Pass unzipFlag and filePattern as query parameters

- Provide a JSON response to send file references and file names.

- Add a body scope and assign all the global variables required.

- varDirectory : replaceString(directory,"_","/")

- varFileName : fileRef.csv

- varWriteOut : /writeOut

- varObjectCount : 0

- varUnzipDirectory : concat("/unzip",$varObjectCount)

- varFilePatternS3 : concat("Contents[?contains(Key,","",filePattern,"",")]")

- varOutputCreated : N

- varS3FileStatus : error

- varS3Counter : 0
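As a rough illustration of the initial assignments, the sketch below mirrors a subset of them in Python. The function `init_globals` and the sample value `inbound_csv_` are hypothetical. The underscore-to-slash replacement presumably exists because the directory arrives as a single template parameter, where slashes would be read as path separators.

```python
def init_globals(directory_param):
    """Mirror a subset of the global variable assignments as a dict."""
    return {
        # Same effect as replaceString(directory, "_", "/").
        "varDirectory": directory_param.replace("_", "/"),
        "varFileName": "fileRef.csv",
        "varWriteOut": "/writeOut",
        "varObjectCount": 0,
        "varOutputCreated": "N",
        # Start in the error state so the retry loop runs at least once.
        "varS3FileStatus": "error",
        "varS3Counter": 0,
    }


# Hypothetical template parameter value.
globals_at_start = init_globals("inbound_csv_")
print(globals_at_start["varDirectory"])
```

One consequence of this convention is that every underscore is replaced, so an S3 folder whose real name contains an underscore cannot be addressed this way.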

- Get list of files from the S3 directory:

- Add a while action for the retry logic that loops up to 3 times, with the condition $varS3FileStatus = "error" and $varS3Counter < 3.0

- Add a scope

- Configure the REST endpoint to get the list of files

- Verb: GET

- Resource URI: /

- Add 4 query parameters

- list-type

- prefix

- delimiter

- query

- Provide the ListBucketResult XML as the response payload.

- Map the directory and file pattern

- On success, assign the success status so that the loop exits

- In the fault handler, increment the counter.

- Add a global variable and use a data stitch to hold the list-of-files response.
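The retry loop and the handling of the ListBucketResult payload can be sketched as follows. This is a minimal sketch under stated assumptions: `fetch_listing` is a hypothetical callable standing in for the signed REST call, and only the `Key` of each `Contents` element is extracted. The XML namespace is the one S3 uses for list responses.

```python
import xml.etree.ElementTree as ET

S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def list_keys_with_retry(fetch_listing, max_attempts=3):
    """Call fetch_listing() until it succeeds or max_attempts failures occur."""
    status, counter, keys = "error", 0, []
    # Same condition as the while action: status is "error" and counter < 3.
    while status == "error" and counter < max_attempts:
        try:
            root = ET.fromstring(fetch_listing())
            keys = [el.text for el in root.findall("s3:Contents/s3:Key", S3_NS)]
            status = "success"  # success path: the loop exits
        except Exception:
            counter += 1  # fault-handler path: count the failed attempt
    return status, keys


# Hypothetical stand-in that fails twice and then returns a listing.
attempts = {"n": 0}


def flaky_listing():
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("simulated S3 failure")
    return (
        '<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">'
        "<Contents><Key>inbound/csv/a.csv</Key></Contents>"
        "<Contents><Key>inbound/csv/b.zip</Key></Contents>"
        "</ListBucketResult>"
    )


result = list_keys_with_retry(flaky_listing)
print(result)
```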

- Iterate over each bucket Contents entry (that is, each listed file)

- Update object count

- varObjectCount : $varObjectCount +1

- varUnzipDirectory : concat("/unzip",$varObjectCount)

- varParentFileName: substring-after(Key,$varDirectory)
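The per-object assignments above amount to a counter increment, a directory name, and an XPath-style `substring-after`. A small Python sketch, with hypothetical helper names and sample values, might look like this:

```python
def substring_after(text, marker):
    """XPath-style substring-after: text after the first marker, else ''."""
    if marker == "":
        # XPath returns the whole string for an empty marker.
        return text
    return text.partition(marker)[2]


def per_object_vars(key, directory, object_count):
    """Compute the per-object variables for one listed S3 key."""
    object_count += 1
    return {
        "varObjectCount": object_count,
        "varUnzipDirectory": f"/unzip{object_count}",
        "varParentFileName": substring_after(key, directory),
    }


# Hypothetical key and directory values.
object_vars = per_object_vars("inbound/csv/orders.zip", "inbound/csv/", 0)
print(object_vars)
```

Giving each object its own unzip directory keeps the extracted files of different archives from colliding.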

- If it is a CSV file (contains($varParentFileName,".csv")="true")

- Call the S3 REST endpoint and configure it as follows:

- Resource URI: /{fileName}

- Verb: GET

- Response: binary, application/octet-stream

- Map the Contents Key to fileName.

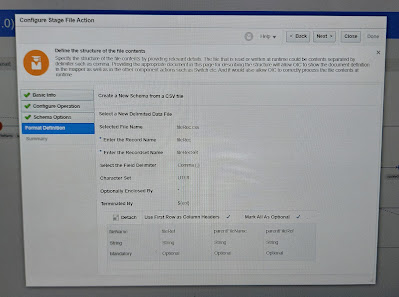

- Write the file stream reference to the stage file as contents.

- Update varOutputCreated = Y

- If it is a zip file (contains($varParentFileName,".zip")="true")

- Call the S3 REST endpoint and configure it as follows:

- Resource URI: /{fileName}

- Verb: GET

- Response: binary, application/zip

- Map the Contents Key to fileName.

- If unzipFlag = N

- Write the zip file reference to the stage CSV file as contents

- Update varOutputCreated to Y

- Otherwise

- Unzip the zip file using a stage file action

- For each unzipped file

- Write the file reference to the stage file as contents

- Update varOutputCreated to Y

- If $varOutputCreated = Y

- Read the stage file reference and map the base64 contents of all CSV files to the response

- Otherwise

- No files were written, so just set varOutputCreated to N

- In the default fault handler, map the scope fault to the response exception code, reason, and details
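The final mapping step, including the fault case, can be sketched as a single function that assembles the response body. The name `build_response`, the tuple layout of `staged_files`, and the `code`/`reason`/`details` keys of the fault dictionary are assumptions for illustration. `base64ParentFileReference` is left empty because the steps above do not say how it is populated.

```python
import base64
import json


def build_response(staged_files, fault=None):
    """Assemble the JSON response from staged (name, parent, bytes) tuples."""
    refs = [
        {
            "base64FileReference": base64.b64encode(data).decode("ascii"),
            "fileName": name,
            "parentFileName": parent,
            "base64ParentFileReference": "",
        }
        for name, parent, data in staged_files
    ]
    fault = fault or {}
    return {
        "fileReferences": refs,
        "ExceptionCode": fault.get("code", ""),
        "ExceptionReason": fault.get("reason", ""),
        "ExceptionDetails": fault.get("details", ""),
        # Y only when at least one file was written to the stage file.
        "OutputCreatedFlag": "Y" if refs else "N",
    }


ok_response = build_response([("a.csv", "orders.zip", b"id\n1\n")])
fault_response = build_response([], {"code": "500", "reason": "S3 call failed"})
print(json.dumps(ok_response, indent=2))
```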

Implementation steps (with screenshots):

Create AWS S3 rest connection:

Rest trigger configure:

Assign Globals:

Get list of files from S3: