Use case: This post shows how to call the ERP REST API to import FBDI data and then check the import status.

Here, we import supplier data.

References:

See the following Oracle pages for the REST API details:

https://docs.oracle.com/en/cloud/saas/financials/24b/farfa/op-erpintegrations-post.html

https://docs.oracle.com/en/cloud/saas/financials/24b/farfa/op-erpintegrations-operationname-get.html

ImportBulkData Operation:

Sample Request body:

{

"OperationName":"importBulkData",

"DocumentContent":"UEsDBBQAAAAIAHSsJUWkOHOwEwEAAHgDAAAXAAAAQXBJbnZvaWNlc0ludGVyZmFjZS5jc3bV0LFOwzAQBuAdiXewOjBdU5/ts+MxNICCIEKEIjEG4SEijUrsCMHT47ZEqpCyIBj6Tzf8urM/JETOORSXV+x88E3nvGerrgmwvMmK2wrGgraWJwIER7VAseAGgvPBdcH1m77xjj3X3Ss7Y6EffAAtSBmEVFhjJKyqHN4AqoeszLP7HID0HEmmljRk5RMU67V7aergIGa8IHE/x1NxPhwngsBjBBdG8jlCAn+XizI/PdlJSDtNNRZQUnzI90f4SLWph5aFiOR637qPSJRK5JBKo8SeqD4kknoutFWpmiCKa39LhAqJ4vp/IlJymmgskP5JNNsaPTauZXcJW9b+3bUtsOs+mW2ljKQolRKpndTn0Ut9AVBLAwQUAAAACAAze2pIXdvnRHQAAACpAAAAEQAAAEFQVEVTVC5QUk9QRVJUSUVTdYrBCoMwEER/Reg1sPQTQgklYFVoatvjNqSwEDYhK4J/b9CrXmYeMy8V9DEA5iwQROBPjOwJo0DGBX8xCBDPiXyFqSAL+okSi9LDx9rH4Co483Tq0r3aVo0k9W36HApu3r6fpu3G3t5Mc9fOvPX3wLjuvQJQSwECFAAUAAAACAB0rCVFpDhzsBMBAAB4AwAAFwAAAAAAAAAAACAAAAAAAAAAQXBJbnZvaWNlc0ludGVyZmFjZS5jc3ZQSwECFAAUAAAACAAze2pIXdvnRHQAAACpAAAAEQAAAAAAAAAAACAAAABIAQAAQVBURVNULlBST1BFUlRJRVNQSwUGAAAAAAIAAgCEAAAA6wEAAAAA",

"ContentType":"zip",

"FileName":"APTEST_0310.zip",

"DocumentAccount":"fin$/payables$/import$",

"JobName":"oracle/apps/ess/financials/payables/invoices/transactions,APXIIMPT",

"ParameterList":"#NULL,Vision Operations,#NULL,#NULL,#NULL,#NULL,#NULL,INVOICE GATEWAY,#NULL,#NULL,#NULL,1,#NULL",

"CallbackURL":"#NULL",

"NotificationCode":"10",

"JobOptions":"InterfaceDetails=1,ImportOption=Y ,PurgeOption = Y,ExtractFileType=ALL"

}
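To make the shape of this request concrete, here is a minimal Python sketch of how such a body could be assembled outside the integration: the CSV is zipped in memory, the zip is base64-encoded into DocumentContent, and the remaining fields are filled in. The function name `build_import_payload`, the one-line CSV content, and the angle-bracket placeholders are assumptions made for illustration only; the real DocumentAccount, JobName, and ParameterList values for a given import have to come from the Oracle documentation. Other JobOptions entries shown in the sample are left out for brevity.

```python
import base64
import io
import json
import zipfile


def build_import_payload(csv_name, csv_text, zip_name, interface_details):
    """Zip one CSV in memory and wrap it in an importBulkData request body."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(csv_name, csv_text)
    # DocumentContent carries the zip file as base64 text.
    encoded = base64.b64encode(buffer.getvalue()).decode("ascii")
    return {
        "OperationName": "importBulkData",
        "DocumentContent": encoded,
        "ContentType": "zip",
        "FileName": zip_name,
        # Placeholders (assumptions): replace with the values for your import.
        "DocumentAccount": "<document account>",
        "JobName": "<job package path>,<job definition name>",
        "ParameterList": "<comma-separated job parameters>",
        "CallbackURL": "#NULL",
        "NotificationCode": "10",
        "JobOptions": f"InterfaceDetails={interface_details}",
    }


# Hypothetical one-line CSV, only to show the call.
payload = build_import_payload(
    "PozSupplierInt.csv", "CREATE,3M\n", "PozSupplierInt.zip", 24
)
print(json.dumps(payload, indent=2)[:300])
```

In the integration itself, the same work is done by the stage file zip action and the mapper; the sketch only shows what ends up in the request.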

Sample Response body:

{

"OperationName":"importBulkData",

"DocumentId":null,

"DocumentContent":"UEsDBBQAAAAIAHSsJUWkOHOwEwEAAHgDAAAXAAAAQXBJbnZvaWNlc0ludGVyZmFjZS5jc3bV0LFOwzAQBuAdiXewOjBdU5/ts+MxNICCIEKEIjEG4SEijUrsCMHT47ZEqpCyIBj6Tzf8urM/JETOORSXV+x88E3nvGerrgmwvMmK2wrGgraWJwIER7VAseAGgvPBdcH1m77xjj3X3Ss7Y6EffAAtSBmEVFhjJKyqHN4AqoeszLP7HID0HEmmljRk5RMU67V7aergIGa8IHE/x1NxPhwngsBjBBdG8jlCAn+XizI/PdlJSDtNNRZQUnzI90f4SLWph5aFiOR637qPSJRK5JBKo8SeqD4kknoutFWpmiCKa39LhAqJ4vp/IlJymmgskP5JNNsaPTauZXcJW9b+3bUtsOs+mW2ljKQolRKpndTn0Ut9AVBLAwQUAAAACAAze2pIXdvnRHQAAACpAAAAEQAAAEFQVEVTVC5QUk9QRVJUSUVTdYrBCoMwEER/Reg1sPQTQgklYFVoatvjNqSwEDYhK4J/b9CrXmYeMy8V9DEA5iwQROBPjOwJo0DGBX8xCBDPiXyFqSAL+okSi9LDx9rH4Co483Tq0r3aVo0k9W36HApu3r6fpu3G3t5Mc9fOvPX3wLjuvQJQSwECFAAUAAAACAB0rCVFpDhzsBMBAAB4AwAAFwAAAAAAAAAAACAAAAAAAAAAQXBJbnZvaWNlc0ludGVyZmFjZS5jc3ZQSwECFAAUAAAACAAze2pIXdvnRHQAAACpAAAAEQAAAAAAAAAAACAAAABIAQAAQVBURVNULlBST1BFUlRJRVNQSwUGAAAAAAIAAgCEAAAA6wEAAAAA",

"FileName":"APTEST_0310.zip",

"ContentType":"zip",

"FileType":null,

"DocumentAccount":"fin$/payables$/import$",

"Comments":null,

"ProcessName":null,

"LoadRequestId":null,

"JobPackageName":null,

"JobDefName":null,

"ReqstId":"14258",

"RequestStatus":null,

"JobName":"oracle/apps/ess/financials/payables/invoices/transactions,APXIIMPT",

"ParameterList":"#NULL,Vision Operations,#NULL,#NULL,#NULL,#NULL,#NULL,INVOICE GATEWAY,#NULL,#NULL,#NULL,1,#NULL",

"NotificationCode":"10",

"CallbackURL":"#NULL",

"JobOptions":"InterfaceDetails=1,ImportOption=Y ,PurgeOption = Y,ExtractFileType=ALL",

"StatusCode":null,

"ESSParameters":null,

"links":[

{

"rel":"self",

"href":"https://servername.fa.us2.oraclecloud.com/fscmRestApi/resources/11.13.18.05/erpintegrations/importBulkData",

"name":"erpintegrations",

"kind":"item"

},

{

"rel":"canonical",

"href":"https://servername.fa.us2.oraclecloud.com/fscmRestApi/resources/11.13.18.05/erpintegrations/importBulkData",

"name":"erpintegrations",

"kind":"item"

}

]

}

GetESSJobStatus Operation:

Sample Response body:

{

"items": [

{

"OperationName": "getESSJobStatus",

"DocumentId": null,

"DocumentContent": null,

"FileName": null,

"ContentType": null,

"FileType": null,

"DocumentAccount": null,

"Comments": null,

"ProcessName": null,

"LoadRequestId": null,

"JobPackageName": null,

"JobDefName": null,

"ReqstId": "14557",

"RequestStatus": "SUCCEEDED",

"JobName": null,

"ParameterList": null,

"NotificationCode": null,

"CallbackURL": null,

"JobOptions": null,

"StatusCode": null,

"ESSParameters": null,

"links": [

{

"rel": "self",

"href": "https://servername.fa.us2.oraclecloud.com/fscmRestApi/resources/11.13.18.05/erpintegrations/getESSJobStatus",

"name": "erpintegrations",

"kind": "item"

},

{

"rel": "canonical",

"href": "https://servername.fa.us2.oraclecloud.com/fscmRestApi/resources/11.13.18.05/erpintegrations/getESSJobStatus",

"name": "erpintegrations",

"kind": "item"

}

]

}

],

"count": 1,

"hasMore": false,

"limit": 25,

"offset": 0,

"links": [

{

"rel": "self",

"href": "https://servername.fa.us2.oraclecloud.com/fscmRestApi/resources/11.13.18.05/erpintegrations",

"name": "erpintegrations",

"kind": "collection"

}

]

}
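The only fields the status check needs from this response are ReqstId and RequestStatus of the first entry in items. As a small illustration, the Python sketch below pulls them out of a trimmed copy of the response; the helper name `extract_request_status` is hypothetical, and returning `(None, None)` for an empty items array is an assumption about how a caller might want to handle that case.

```python
import json


def extract_request_status(response_text):
    """Return (ReqstId, RequestStatus) from a getESSJobStatus response."""
    body = json.loads(response_text)
    items = body.get("items") or []
    if not items:
        # Assumption: treat an empty result as "no status yet".
        return None, None
    first = items[0]
    return first.get("ReqstId"), first.get("RequestStatus")


# Trimmed version of the sample response above.
sample = (
    '{"items": [{"OperationName": "getESSJobStatus", '
    '"ReqstId": "14557", "RequestStatus": "SUCCEEDED"}], "count": 1}'
)
print(extract_request_status(sample))  # ('14557', 'SUCCEEDED')
```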

Implemented steps:

- Create a REST API connection using the ERP REST URL, with basic authentication (user name and password) as the security policy.

- First, list the files in an FTP directory using the FTP connection.

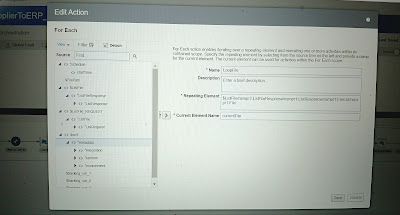

- Add a for-each action to iterate over the listed files.

- Read each file using the FTP adapter connection. Provide a sample CSV file. Map the directory and filename of currentFile to the FTP read request.

- Add a stage file write action that writes the data in the supplier FBDI template format. Filename: PozSupplierInt.csv. The sample CSV file has columns C1 to C156. Map the required columns.

- Zip the file using a stage file action. Filename: PozSupplierInt.zip

- Configure the REST call for importBulkData:

  - REST URI: /fscmRestApi/resources/11.13.18.05/erpintegrations

  - Verb: POST

  - Configure the request and response JSON payloads.

  - Map the required values.

- Assign a status variable with value "NOT_SUCCEEDED".

- Add a while loop that repeats until the import succeeds. Condition: $status != "SUCCEEDED"

- Inside the loop, configure a REST endpoint to check the import status:

  - URI: /fscmRestApi/resources/11.13.18.05/erpintegrations

  - Verb: GET

  - Add a query parameter: finder

  - Provide a JSON payload for the response.

  - Map ReqstId from the importBulkData response to finder: finder = concat("ESSJobStatusRF;requestId=", ReqstId)

  - Update status = RequestStatus
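The status-check part of these steps can be sketched in Python as follows. This is an illustration under stated assumptions, not the integration itself: the helper names (`basic_auth_header`, `status_url`, `wait_for_job`) are hypothetical, the HTTP call is passed in as a function so the loop can be tried without a server, and the poll limit and the extra terminal states (ERROR, WARNING, CANCELLED) are assumptions added so the loop cannot run forever when a job never reaches SUCCEEDED.

```python
import base64
import time
from urllib.parse import urlencode

RESOURCE_PATH = "/fscmRestApi/resources/11.13.18.05/erpintegrations"


def basic_auth_header(user, password):
    """Build the HTTP Basic authentication header for the connection."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8"))
    return {"Authorization": "Basic " + token.decode("ascii")}


def status_url(base_url, reqst_id):
    """Build the GET URL with finder=ESSJobStatusRF;requestId=<ReqstId>."""
    finder = f"ESSJobStatusRF;requestId={reqst_id}"
    return f"{base_url}{RESOURCE_PATH}?{urlencode({'finder': finder})}"


def wait_for_job(fetch_status, reqst_id, poll_seconds=30, max_polls=40,
                 terminal=("SUCCEEDED", "ERROR", "WARNING", "CANCELLED")):
    """Poll until the job reaches a terminal state or max_polls is used up.

    fetch_status is any callable that takes the request id and returns the
    current RequestStatus string (for example, by calling status_url).
    The terminal tuple and max_polls are assumptions, not part of the flow.
    """
    status = "NOT_SUCCEEDED"
    for _ in range(max_polls):
        status = fetch_status(reqst_id)
        if status in terminal:
            break
        time.sleep(poll_seconds)
    return status


# Dry run with canned replies instead of a real server.
replies = iter(["RUNNING", "RUNNING", "SUCCEEDED"])
final = wait_for_job(lambda _id: next(replies), "14258", poll_seconds=0)
print(final)
print(status_url("https://servername.fa.us2.oraclecloud.com", "14258"))
```

Passing the fetch function in keeps the loop logic separate from the transport, which mirrors how the while loop in the integration only looks at the status variable.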

Detailed screenshots:

Create Rest connection:

Integration flow:

ESS job status check:

Notes:

1. When invoking importBulkData for the supplier import, set InterfaceDetails in JobOptions to 24; otherwise ERP will not find the correct supplier control file. (The sample request above, which imports Payables invoices, uses InterfaceDetails=1.)

2. Supplier FBDI template:

3. Supplier source file sample:

SupplierSource.csv

Action,SupplierName,SupplierNumber,AlternateName,OrgType,InactiveDate,SupplierType,Relationship,Permanent,Country,TaxID

CREATE,3M,Company123,3221,3M,Corporation,09-10-2014,Supplier,SPEND_AUTHORIZED,N,US,410417775

4. The same REST resource supports other operations as well, such as uploadFileToUCM, submitESSJobRequest, exportBulkData, and purging the interface tables.