Use Case:

OIC: Splitting a fixed-length file into two separate files, based on the terminal numbers in its batch headers, using XSLT mapping.

In integration workflows, processing fixed-length files is a common requirement. A typical fixed-length file might contain hierarchical data structured as:

1. File Header: Represents metadata for the file.

2. Batch Header: Denotes the start of a batch, including terminal-specific identifiers (e.g., 001 or 002).

3. Detail Records: Contain individual transaction or data entries for each batch.

4. Batch Trailer: Marks the end of a batch.

5. File Trailer: Marks the end of the entire file.

Problem Statement:

Given a fixed-length file structured as above, the requirements are:

Identify Batch Headers containing specific terminal numbers (e.g., 001, 002).

Split the file into separate outputs based on these terminal numbers.

Transform each split batch into a target file format for further processing.

Example Input File:

File Header

Batch Header (001 Terminal)

Detail

Detail

Batch Trailer

Batch Header (002 Terminal)

Detail

Detail

Batch Trailer

File Trailer

Expected Output:

File 1: Contains the data for terminal 001.

Batch Header (001 Terminal)

Detail

Detail

Batch Trailer

File 2: Contains the data for terminal 002.

Batch Header (002 Terminal)

Detail

Detail

Batch Trailer
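The expected behaviour can be illustrated with a small Python sketch. It works on the symbolic labels from the example above rather than on real fixed-length records, and the function name `split_by_terminal` and the label patterns are assumptions made for illustration only. It is also a single-pass grouping, which is a different technique from the position-based XSLT mappings used in this post.

```python
import re


def split_by_terminal(lines):
    """Group each batch (header through trailer) under its terminal number."""
    files = {}
    current = None  # batch currently being collected, or None outside a batch
    for line in lines:
        header = re.match(r"Batch Header \((\d{3}) Terminal\)", line)
        if header:
            # A batch header opens (or reopens) the output for its terminal.
            current = files.setdefault(header.group(1), [])
        if current is not None:
            current.append(line)
        if line == "Batch Trailer":
            # The trailer closes the batch; file-level records are skipped.
            current = None
    return files


lines = [
    "File Header",
    "Batch Header (001 Terminal)", "Detail", "Detail", "Batch Trailer",
    "Batch Header (002 Terminal)", "Detail", "Detail", "Batch Trailer",
    "File Trailer",
]
files = split_by_terminal(lines)
```

Here `files["001"]` and `files["002"]` each hold one batch header, two detail records, and one batch trailer, matching File 1 and File 2 above.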

Solution Overview:

1. File Parsing: Read the fixed-length file using a CSV sample file, so that each line arrives as one record with a single Data field.

2. Get batch header positions: Identify the record positions of the Batch Headers for terminal numbers 001 and 002.

3. Splitting Logic: Using the positions fetched in step 2, extract the data between Batch Header and Batch Trailer for terminal numbers 001 and 002 respectively.

4. Read the split fixed-length files: Read each split file using an NXSD schema.

5. Transformation: Convert the split content into the desired target file format (e.g., XML or JSON).

6. Output Generation: Write the transformed content into separate output files.

This approach keeps each stage separate, so the batch for each terminal can be parsed and transformed independently before it is passed to downstream systems.
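Reading a split file with an NXSD schema essentially means describing each field by its position and length. A minimal Python sketch of that idea follows. The layout below is hypothetical: only the terminal number at columns 23 to 25 is taken from the mappings in this post, while the `record_type` and `filler` fields and the helper `parse_fixed_length` are assumptions made for illustration.

```python
# Hypothetical batch header layout: (field name, 1-based start column, length).
# Only the terminal number at columns 23-25 comes from the mappings in this post.
BATCH_HEADER_LAYOUT = [
    ("record_type", 1, 2),
    ("filler", 3, 20),
    ("terminal", 23, 3),
]


def parse_fixed_length(line, layout):
    """Slice one fixed-length record into named, whitespace-trimmed fields."""
    return {
        name: line[start - 1 : start - 1 + length].strip()
        for name, start, length in layout
    }


# Sample record built to match the hypothetical layout above.
line = "RH" + " " * 20 + "001"
parsed = parse_fixed_length(line, BATCH_HEADER_LAYOUT)
```

With this sample line, `parsed["record_type"]` is `"RH"` and `parsed["terminal"]` is `"001"`. A real layout would list every field of the target record.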

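XSLT code used to get the batch header positions for terminals 001 and 002 (in the mapping, CircleK holds the position for terminal 001 and Vango the position for terminal 002):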

<xsl:template match="/" xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xml:id="id_11">

<nstrgmpr:Write xml:id="id_12">

<ns28:RecordSet>

<xsl:variable name="CircleKPosition">

<xsl:for-each select="$ReadSourceFile/nsmpr2:ReadResponse/ns26:RecordSet/ns26:Record" xml:id="id_48">

<xsl:choose>

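<!-- Batch header for terminal 001: record contains 'RH' and characters 23-25 hold the terminal number -->
<xsl:when test="contains(ns26:Data, 'RH') and (substring(ns26:Data, 23, 3) = '001')">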

<xsl:value-of select="position()" />

</xsl:when>

</xsl:choose>

</xsl:for-each>

</xsl:variable>

<xsl:variable name="VangoPosition">

<xsl:for-each select="$ReadSourceFile/nsmpr2:ReadResponse/ns26:RecordSet/ns26:Record" xml:id="id_48">

<xsl:choose>

<!-- Batch header for terminal 002: same check with a different terminal number -->
<xsl:when test="contains(ns26:Data, 'RH') and (substring(ns26:Data, 23, 3) = '002')">

<xsl:value-of select="position()" />

</xsl:when>

</xsl:choose>

</xsl:for-each>

</xsl:variable>

<ns28:Record>

<ns28:CircleK>

<xsl:value-of select="$CircleKPosition" />

</ns28:CircleK>

<ns28:Vango>

<xsl:value-of select="$VangoPosition" />

</ns28:Vango>

</ns28:Record>

</ns28:RecordSet>

</nstrgmpr:Write>

</xsl:template>
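The position lookup in this mapping can be sketched in Python as follows. The helper names `batch_header_position` and `make_record`, and every record tag other than `RH`, are hypothetical. Note one difference: the XSLT `for-each` emits the position of every matching record, so several batch headers for the same terminal would be concatenated into one value, whereas this sketch returns only the first match.

```python
def batch_header_position(records, terminal):
    """Return the 1-based position of the batch header for `terminal`, or None."""
    for position, data in enumerate(records, start=1):
        # XPath substring(data, 23, 3) is 1-based, so it maps to the slice [22:25].
        if "RH" in data and data[22:25] == terminal:
            return position
    return None


def make_record(tag, terminal=""):
    # Hypothetical layout: record tag first, terminal number in columns 23-25.
    return tag.ljust(22) + terminal


records = [
    make_record("FH"),         # file header
    make_record("RH", "001"),  # batch header, terminal 001
    make_record("DT"),
    make_record("DT"),
    make_record("RT"),         # batch trailer
    make_record("RH", "002"),  # batch header, terminal 002
    make_record("DT"),
    make_record("DT"),
    make_record("RT"),
    make_record("FT"),         # file trailer
]

circle_k_position = batch_header_position(records, "001")
vango_position = batch_header_position(records, "002")
```

For this sample, `circle_k_position` is 2 and `vango_position` is 6, because positions are counted from 1 as in XPath `position()`.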

XSLT code for splitting out the 001 file (the 002 file is produced in the same way):

<xsl:template match="/" xml:id="id_175">

<nstrgmpr:Write xml:id="id_17">

<ns31:RecordSet xml:id="id_56">

<xsl:choose xml:id="id_59">

<xsl:when test="number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:CircleK) &lt; number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:Vango)" xml:id="id_60">

<xsl:for-each select="$ReadSourceFile/nsmpr2:ReadResponse/ns28:RecordSet/ns28:Record[position() &lt; number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:Vango)]" xml:id="id_61">

<ns31:Data>

<xsl:value-of select="ns28:Data" />

</ns31:Data>

</xsl:for-each>

</xsl:when>

<xsl:when test="number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:CircleK) &gt; number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:Vango)" xml:id="id_62">

<xsl:for-each select="$ReadSourceFile/nsmpr2:ReadResponse/ns28:RecordSet/ns28:Record[position() &gt;= number($WriteBatchHeaderPositions_REQUEST/nsmpr3:Write/ns32:RecordSet/ns32:Record/ns32:CircleK)]" xml:id="id_63">

<ns31:Data>

<xsl:value-of select="ns28:Data" />

</ns31:Data>

</xsl:for-each>

</xsl:when>

</xsl:choose>

</ns31:RecordSet>

</nstrgmpr:Write>

</xsl:template>
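The branching in this mapping can be sketched in Python as follows. The function name `split_for_terminal` and the sample records are hypothetical, and the call for terminal 002 assumes that its mapping mirrors the 001 mapping with the two positions swapped.

```python
def split_for_terminal(records, own_pos, other_pos):
    """Return the records the mapping would write for one terminal.

    Positions are 1-based, as returned by XPath position().
    """
    if own_pos < other_pos:
        # Own batch comes first: keep records with position() < other_pos.
        return records[: other_pos - 1]
    if own_pos > other_pos:
        # Own batch comes second: keep records with position() >= own_pos.
        return records[own_pos - 1 :]
    # Neither xsl:when branch matches, so nothing is written.
    return []


records = [
    "FILE HEADER",
    "BATCH HEADER 001", "DETAIL", "DETAIL", "BATCH TRAILER",
    "BATCH HEADER 002", "DETAIL", "DETAIL", "BATCH TRAILER",
    "FILE TRAILER",
]

# Positions as produced by the batch header position step for this sample.
file_001 = split_for_terminal(records, own_pos=2, other_pos=6)
file_002 = split_for_terminal(records, own_pos=6, other_pos=2)
```

Under this reading of the mapping, the first slice still starts with the file header and the last slice still ends with the file trailer. If the target files must contain only the batch records, as in the expected output, those two records would need to be filtered out separately.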

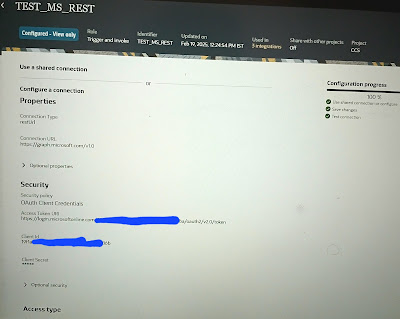

Screenshots:

For getting batch header positions

For splitting the content.